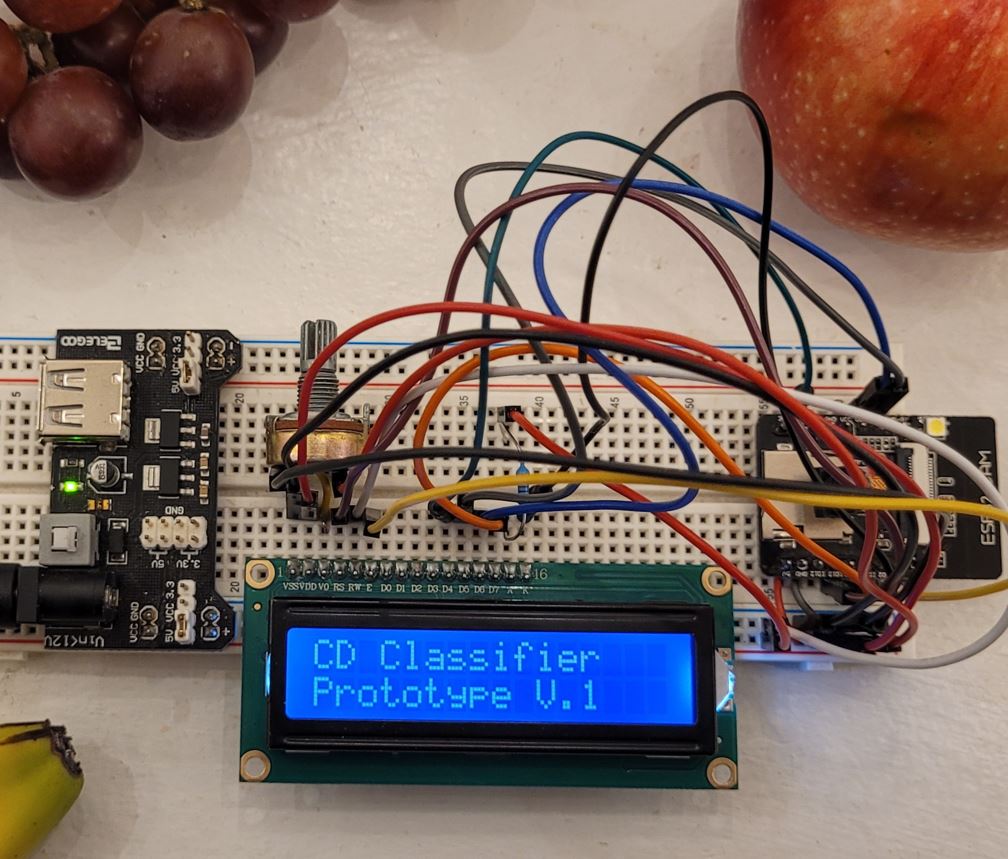

This is CDI.

Machine Learning | Embedded Systems

Congenital Disorder Identifier employing computer vision to detect congenital diseases in resource-constrained areas, showcasing self-contained operation and local model training.

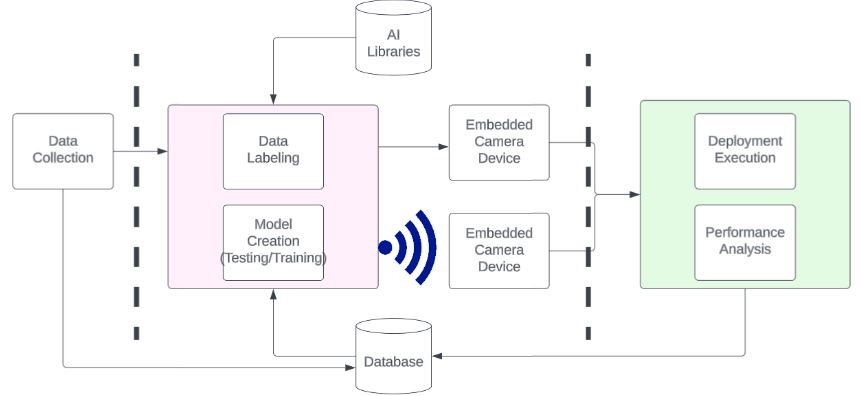

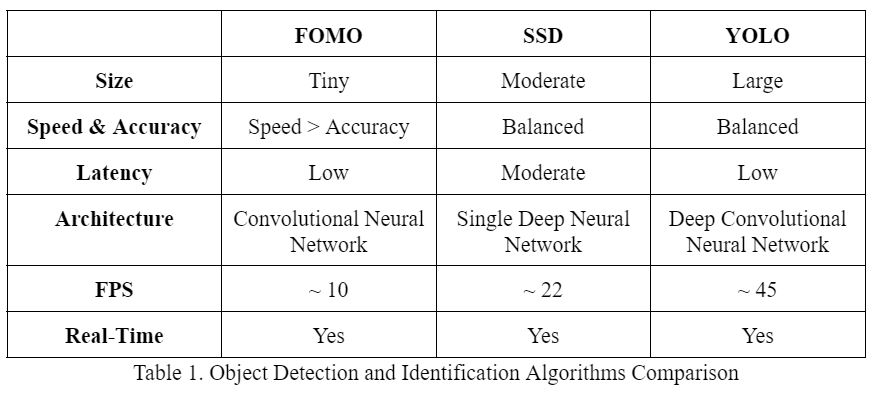

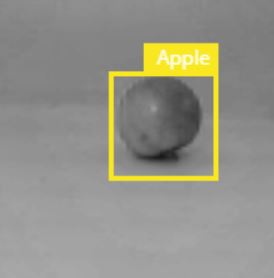

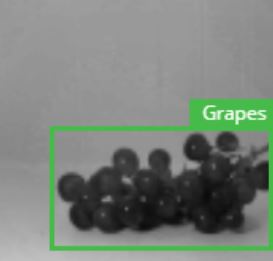

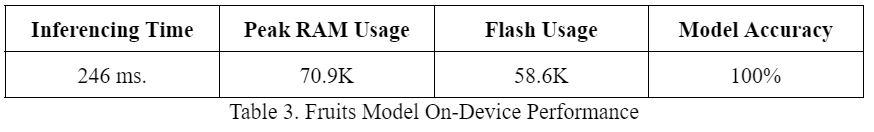

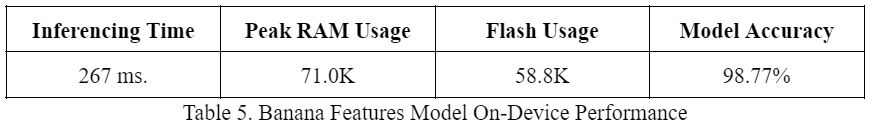

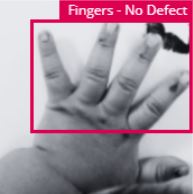

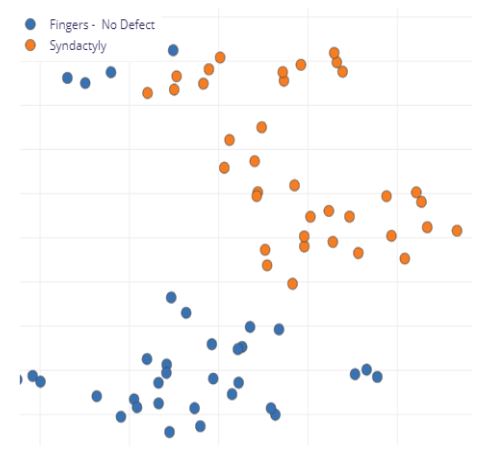

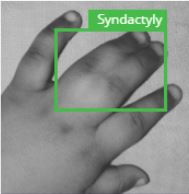

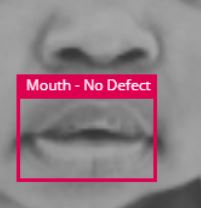

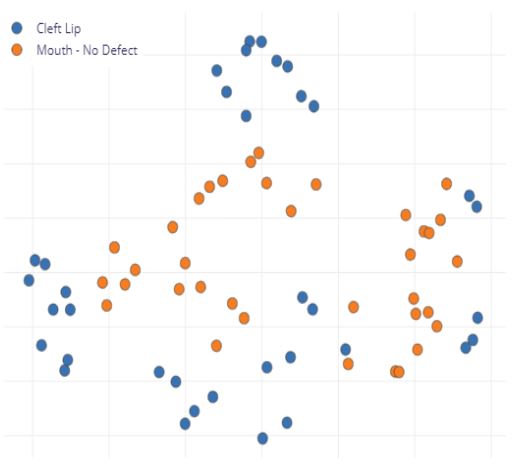

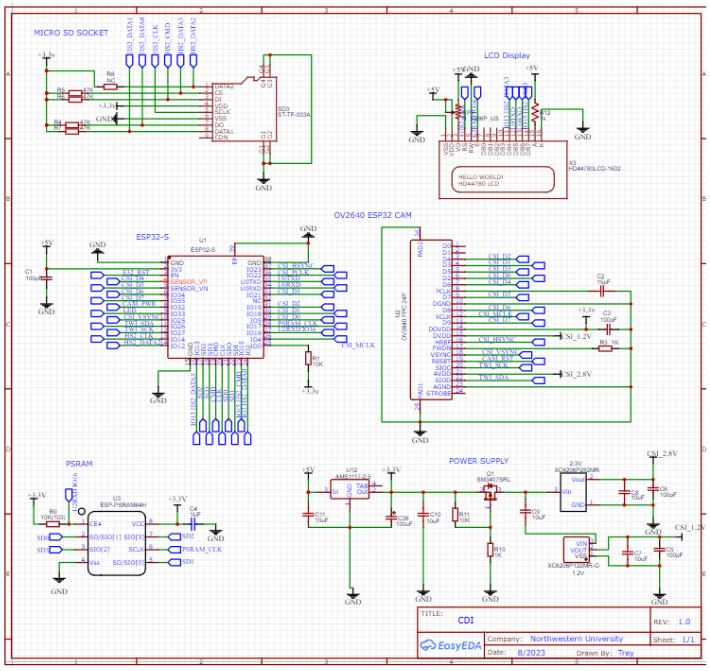

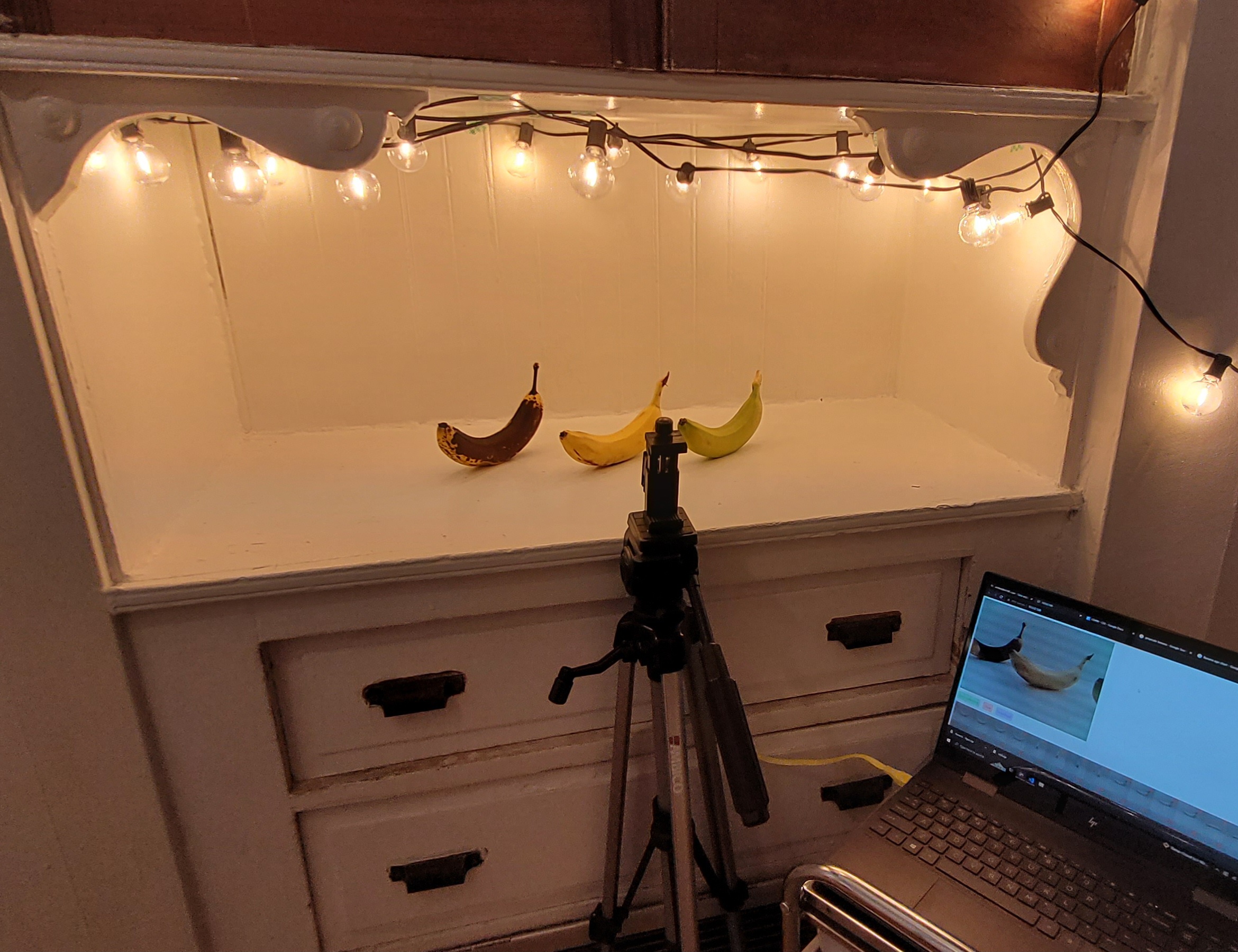

The project develops an embedded ML system called the "Congenital Disorder Identifier, Embedded Camera." The system runs a computer vision model (FOMO MobileNetV2 0.1) on the device itself to identify external congenital diseases. It is a self-contained, portable device that demonstrates machine learning at the edge and needs no server connectivity. This design addresses the diagnostic gap in medical care in areas that lack network infrastructure, offering a complete ML solution for resource-constrained environments. Data collection, model training, and deployment all happen locally on the device, which makes it autonomous and suited to regions with limited network access. The project aims to narrow healthcare disparities by bringing on-site diagnostic capabilities to underserved areas.
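To make the model's role more concrete, here is a minimal sketch of how the output of a FOMO-style model might be post-processed on the device. It assumes the model emits a coarse grid of per-cell class scores, with index 0 as background and each cell covering 8×8 input pixels. The function name `grid_to_centroids`, the label `"finding"`, the threshold, and the example grid are all hypothetical and are not taken from the project's firmware.

```python
from typing import List, Sequence, Tuple

# (label, x_px, y_px, score): centroid position in input-image pixels
Detection = Tuple[str, int, int, float]


def grid_to_centroids(
    grid: Sequence[Sequence[Sequence[float]]],
    labels: Sequence[str],
    cell_px: int = 8,        # assumed pixels per grid cell
    threshold: float = 0.5,  # assumed confidence cutoff
) -> List[Detection]:
    """Turn a rows x cols x classes score grid into centroid detections.

    Assumes at least two classes, with class index 0 meaning background.
    """
    detections: List[Detection] = []
    for row, cells in enumerate(grid):
        for col, scores in enumerate(cells):
            # Pick the strongest non-background class for this cell.
            best = max(range(1, len(scores)), key=lambda i: scores[i])
            if scores[best] >= threshold:
                # Report the centre of the cell in input-pixel coordinates.
                x = col * cell_px + cell_px // 2
                y = row * cell_px + cell_px // 2
                detections.append((labels[best], x, y, scores[best]))
    return detections


# Hypothetical 2x2 grid with two classes: background and one finding.
EXAMPLE_LABELS = ["background", "finding"]
EXAMPLE_GRID = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.7, 0.3], [0.95, 0.05]],
]

if __name__ == "__main__":
    for det in grid_to_centroids(EXAMPLE_GRID, EXAMPLE_LABELS):
        print(det)
```

Reporting centroids rather than full bounding boxes keeps the post-processing cheap, which suits a device that has to run everything locally.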

The "Congenital Disorder Identifier, Embedded Camera" is a self-contained, portable ML device for computer vision, enabling the identification of external congenital diseases without reliance on server connectivity.